The growing power and applicability of data science has helped to make the field a juggernaut in both industry and academia. To help prepare students of many different interests for a data-rich world, Prof. David E. Culler, former Chair of the Department of Electrical Engineering and Computer Sciences at the University of California, Berkeley, has been working with his colleagues there to build a new and broad data science education curriculum.

David spoke last summer at a Computing Research Association (CRA) panel session, “Data Science in the 21st Century,” which Two Sigma Research Lab director Steve Heller co-chaired. David also serves on CRA’s Committee on Data Science, which subsequently published “Computing Research and the Emerging Field of Data Science,” a blog post laying out key opportunities (and responsibilities) for data scientists as the field advances.

David recently sat down with Steve for a wide-ranging follow-up discussion on the future of data science, the “Berkeley view” of the field, and the biggest challenges for data scientists today.

Steve Heller: All the examples in statistics textbooks are couched in an application domain. Could we view data science as computer science’s coming of age, in the sense that it’s intrinsically tied to serving other disciplines (which we call CS+X)?

David Culler: Lovely question; let’s take it apart a little bit.

The highest goal of computer science is universality; it’s our greatest strength and also our greatest weakness. When we have really tackled a problem, the solution is applicable very broadly. Statistics has a different character. Statistics departments have always been deeply embedded in domains.

So there are very different kinds of cultural structures, and it’s not that one is better—the two are really quite different. Michael Jordan explains that statisticians kind of embed themselves, whereas computer scientists are often in the role of creating the platform.

Embedding works so well for statistics because, very often, the challenges of a particular application domain get brought back inside the field and push its envelope: you come to understand that, to create models in one domain, you need to idealize the data with different distributions than the ones you’ve been using in other domains. So I think that reveals part of the difference in the nature of the two fields.

SH: This is exactly the point I’m trying to come to: that data science happened in some ways outside the university. Industry charged forward, with large data sets and analysis and specific applications, which then went back inside and were understood in the data science community, much in the way you describe statistics occasionally picking up some new direction. And certainly, historically, the two have been different. But the question is, is computer science now taking on a bit of the flavor that statistics always has had?

DC: I do think it’s taking on more of that claim for embeddedness, but I’m not sure that’s the same as its coming of age. I think you’re getting at a different question, which is how deep engagement with actual applications that matter will also open up new frontiers within computer science itself, in the same way that the nature of that relationship does in statistics. Part of our maturation is taking in those learnings and then creating a new platform that enables not just the application that those insights came from but many others, too.

But the other question you raise is “Where did this come from?” One of the big influences was certainly the return from industry into the academic setting, a development that came with very well-formed questions in the context of very large, very ill-formed data. This process has driven an interesting conversation inside Berkeley: “When did we start doing data science?” Well, you can argue that we started in 1998, when we hired Mike Jordan, Mike Franklin, and Laurent El Ghaoui, but nobody called it that then, right?

Over the nearly twenty years since, you can trace this progression of statistical machine learning and its connections and applications, and some other folks say, “Oh yeah, you know data science—we did that a long time ago!” For example, look at the transformation in computer vision that happened fifteen years ago, which was the big data revolution—maybe the primordial one. Instead of these very sophisticated algorithms on a very small dataset, we were using relatively straightforward algorithms on very, very large datasets.

SH: The same thing happened in search, where there were two camps, semantic and statistical analysis. Google represented the statistical side; they had so many examples that statistical analysis just won.

DC: I often hear that, but I think it started as a much more complicated interplay in both directions. I hear it from folks in information science, the idea that data science came from industry, and it completely fails to recognize that there has been a whole, profound contribution going the other way, and back and forth.

Look at the way we formulate things: the emergence of data-parallel operations on extremely large datasets. That was huge, but it didn’t get invented with MapReduce.

SH: You mean Map & Reduce, as invented in the late fifties and sixties?

DC: (Laughs) Exactly! Our goal, certainly in the systems part of computer science as well as elsewhere, has been to make things work well enough to be taken for granted. It’s also an opportunity to understand the very long and interwoven gestation cycles that bring these kinds of technologies to the point where you can take them for granted.

SH: Berkeley has made dramatic changes already, embracing a university-wide data science initiative. What is the “Berkeley view?” Is Berkeley’s view of data science unique in academia? Is it universal? Specifically, could you reflect on your experience with Berkeley’s Data 8: Foundations of Data Science class, and in particular comment on the participation of non-CS students as part of the data science + X initiative?

DC: We often publish the “Berkeley view” on X. Let me go back a little bit. When the Moore and Sloan Foundations initiated their data science effort in 2013, they started a process that was a little unusual. They didn’t ask for proposals; they asked institutions throughout the country to take inventory of data science on their campuses. That was a fabulous exercise, because we discovered that data science was already everywhere in the research domains, a reflection of what we had seen in industry for several years. But another message came out of that process. Faculty throughout the disciplines were very clear that there was no way for their graduate students, and their future graduate students, to obtain the knowledge they needed in order to really open up the frontiers of their own fields.

We realized that the modern research university had fallen behind in what you can argue is its central mission: educating the next generation of researchers. And while it may well also have fallen behind in contributing to the workforce, we have many tools for remedying that: professional master’s programs, hack-camps, immersion programs, and so on.

But the research agenda itself was falling into this huge gap. This finding caused us to really consider the question: If you look at what every educated person from 2020 forward needs to know to function in this world, what are the elements of that? What’s the role of computing and inference and statistical analysis? How does that become part of these students’ knowledge base, their skill set, what they can use in understanding the world around them? By this, we meant not just students being passive consumers of analyses, but students having the ability to produce insights, and to produce them as output in a way that others can take as input.

That’s the power of a computing perspective: you’re but one layer in the stack or one stage in that flow. So, that exercise gave us license to step back and really ask the question: If you were not constrained by all of the contingencies of your existing program, if you were building an education program from scratch that would be accessible to, for example, the entire student body of a place like Berkeley, with all of its diverse backgrounds and interests, how would you approach that? What would you need? What would you want students to learn, and at what stage?

Part of what came out of that exercise was that by learning computing and statistics together in the context of real-world data, the experience with both is fundamentally different. You’re learning computing by doing interesting things on interesting data, rather than by solving what you might think of as little puzzles (which is often how we formulate many of the concepts that we want students to internalize), and that makes it both fun and intellectually engaging. This produced an entirely different level of engagement.

Now, to teach many of the mathematical concepts in the underlying statistics, rather than presenting them first in symbolic form followed by lots of examples, we can apply computational techniques to real data and let the concepts emerge; the symbolic formulation then becomes a beautiful way to capture a very concise understanding.

I mean, literally sitting in the back of the room when Ani Adhikari finally got to the Central Limit Theorem, I thought the students were going to stand up and say “Hallelujah!” They had observed the behavior of various statistics on data, and here it all came together. Similarly, they had experienced and simulated the null hypothesis all semester, so they had internalized it. It was not some weird logical formulation.

We came to this notion of building a foundations course that really had these three legs of computing, statistics, and real-world context all deeply integrated. We also realized that no matter how good a job you could do with such a thing, you could not do justice to the huge diversity of students’ interests. You could pay lip service to it, but you really couldn’t do justice to it.

The same applies to the difference in backgrounds. We needed to really take a modular view of the instructional process, and that gave rise to a set of what we call “connector courses” that are designed to be taken concurrently with the foundations course. Taking them concurrently means that there’s a symmetric relationship. It’s not that one is a prerequisite for the other, or that one serves the other.

The perspective is that you are taking the knowledge you’re gaining in the foundations course and applying it into an area that you’re particularly excited about. Maybe that’s global children’s health, or race and policing. As you’re acquiring these new foundational concepts, you’re carrying a perspective built up in experiences that are close to your heart.

You may wonder whether that would require faculty to actually design syllabi that “zip” together. The connector faculty would have to pay close attention to what’s going on in the foundations course, or the faculty teaching the foundations course would have to understand what the connectors need when sequencing topics, and that’s exactly right. It’s hard, and it’s precisely what we need. We often talk about these Venn diagrams: how do you get these different pieces to work together? The answer is to get them to actually work together. So that process of creating an interwoven educational mesh was arguably as important as the material itself.

SH: So the connector courses have a framework?

DC: That’s exactly right. They do have a framework, which you can think of as the warp and weft. But they also have a pattern, which you could say colors the students’ thinking as they’re acquiring the foundations.

SH: Let’s push on. So, Berkeley’s clearly an academic leader in this new field, and when I look at the broader picture, I see that the White House has appointed D.J. Patil as Chief Data Scientist. Here at Two Sigma we’ve developed technology to collect and process tremendous volumes of diverse data. Moving forward, do you see the possibility of educational-governmental-industrial cooperation, and how can this best be achieved in an environment where many of the efforts in industry involve a lot of intellectual property that can’t easily be shared—either data or methodology—and some part of the governmental data contains confidential information about individuals?

DC: There’s a huge opportunity for the confluence of these three legs. Let’s start with entirely open data—and that’s probably a really valuable place to start—because what we’re seeing is that, as the whole data science world develops, it’s going along with a degree of transparency in governance and society; transparency that’s probably long overdue, and that may even be fundamental to democracy in a connected age.

Obama is the first president to ever write a line of code (probably not terribly many lines). The federal budget is on GitHub. Cities throughout the country are making data available. So much of governance is really that role of shepherding citizens, and historically we’ve had extremely crude processes for collecting information in town halls and meetings and surveys and whatnot. Meanwhile, very little of that information is going the other way. How did the city formulate its traffic plan, or its bicycle plan, or its housing plan, or its health and services plan?

The ability to make these very complicated forms of data broadly available, rather than neatly packaged for a particular purpose, means that government or industry can share them with the education community and, more generally, with the public.

SH: So, you are focusing on transparency and open data.

DC: Yup. That’s a natural starting point for the convergence of the three. Everywhere we move beyond that, we get into a huge mass of very subtle, complicated issues that almost always need to be solved on a case-by-case basis.

SH: Do you see the transparency and openness as an extension or evolution of the open source movement, which is a little bit more established?

DC: It’s both similar and different. Part of what you see in the open source movement is individuals creating software that others not only can use but can build upon and extend. Extending that to data, to open data, is, I think, a really important insight. It’s not just that the data is open and that you can get to it, which is without question an enabler, but also that the derivatives of your work on data may well be the starting points for others. I think that’s an aspect of the open source community that’s often underappreciated: each of us is one step in this network. However true that is of the very complex software stacks we assemble these days, in the data world I think it’s even more profound, as we think about the outputs of our analysis being inputs to further analysis.

There are other questions where the open source and open data movements are really quite different. When we look at open source, we consider what constitutes a creative act, and the copyright and whatnot that go along with that. When we look at data, it’s an observational act, which is quite different.

SH: The CRA post highlights several challenges. I’d like to focus on the problem of fair and ethical data science. In particular, many actors now collect and save everything. Should they continue to do so? Further, how do you envision monitoring happening, to ensure that fair and ethical data science is practiced in academia, industry, and government?

DC: So when we look at fairness and ethics, there is collecting everything. There’s also what you do with it. There’s also quite a bit of attention right now to the ways in which the various learning algorithms are capturing a prevailing bias.

So, quite apart from the data and who is holding onto what, that issue, the extent to which our tacit biases become codified in what shapes our future, is really huge. And it’s important that people understand that this is an inherent challenge in the nature of learning, whether machine learning or human learning. Fortunately, there’s quite a bit of attention to that.

SH: Does that potentially become an excuse for actors to dismiss their biases, because they are supported, in some sense, by the idea that “I’m not making decisions; this is just what the data says”?

DC: Yeah.

SH: In fact, the data comes from a history of bias.

DC: So it’s not only that the data comes from a history of bias; the learning applied to the data also comes from a history of bias, and algorithms may well amplify that bias rather than understand it.

For starters, it’s important to understand that that concept is out there, that it is a fact to be dealt with. Just as today you want to get multiple perspectives on a question, it’s going to be very important to get multiple analyses on an underlying set of data. The issue also gets at questions of big, unsolved problems, like the nature of transparency. How are insights derived, and what is the nature of the biases that are codified in those very complex processes? It’s very, very hard to go back through that chain of inference to see where an insight came from.

But you’re touching on another point, as well—and I want to open that up a little bit—of the underlying data itself. Huge amounts of data are being collected everywhere, in ways that few of us might even imagine. Almost every part of life now is leaving some sort of a digital trail that can be the starting point for analysis and inference, and we do not even have a concept, really, of all of the places that some observation of us has gone. Do you know, when you use your frequent flyer card at the supermarket, where all of that information goes? When you use your credit card, it creates a tremendous amount of information about your entire history. Where does that flow in society? None of us have any way of picturing that.

As you look at the legal structure around these questions, the closest thing we have is the Fair Information Practices Act, whose central tenet begins with the purpose for which the information was obtained. Part of what we’re seeing here is that the potential set of purposes is so broad, and so separated from the point at which the information was obtained in the first place, that purpose and information are almost completely separated today. I think this is a question that, as a society, we haven’t gotten our heads around at all. The privacy rules of various corporations really don’t get at this question of the set of purposes, and it is very hard to circumscribe.

SH: What are some recent big advances and failures in data science, and what are the biggest unsolved problems or unknowns? It sounds like the issue of data provenance, as well as fair and ethical use, is one of the challenges.

DC: Yes. Sometimes we ask the question, “Where did this data come from? What’s the provenance?” And it gets even more interesting when the question becomes, “Where did these analyses of many datasets come from?” At some level, however, we should be asking yet another question: “Where will this data go?” That’s a much harder unsolved problem.

SH: Is that the converse of an access control list? An access control list asks, “Can I have this?” rather than “Where can this be allowed?”

DC: We’re touching on IFC, information flow control. That’s the other side: “Can I limit where this goes?” When we think about what we most want to achieve in the growth of data science, the answer is almost invariably discovery, right? All of these wonderful scientific discoveries, these wonderful insights into the human condition and into society, lead us to another thread of this question. I see a tremendous number of talks about tools, about how to do this visualization or that analysis, and it feels to me that, at some level, we’re back in the assembly-language days, but with much bigger objects. What we don’t have is any kind of language that operates at a much higher level, one that’s focused on the discovery process and gives us ways of building those discoveries.

So, among the huge unsolved problems are transparency and languages that really facilitate the discovery process. Looking forward in time and inference, we must ask the question, “If I allow this piece of data to be created, where are all the places in society that some residue of that act will be represented?” I don’t even know how we begin to imagine visualizing that. But at some level, that’s the question we as citizens would like to be able to understand.

SH: Would you like to pick one recent big data science success to highlight?

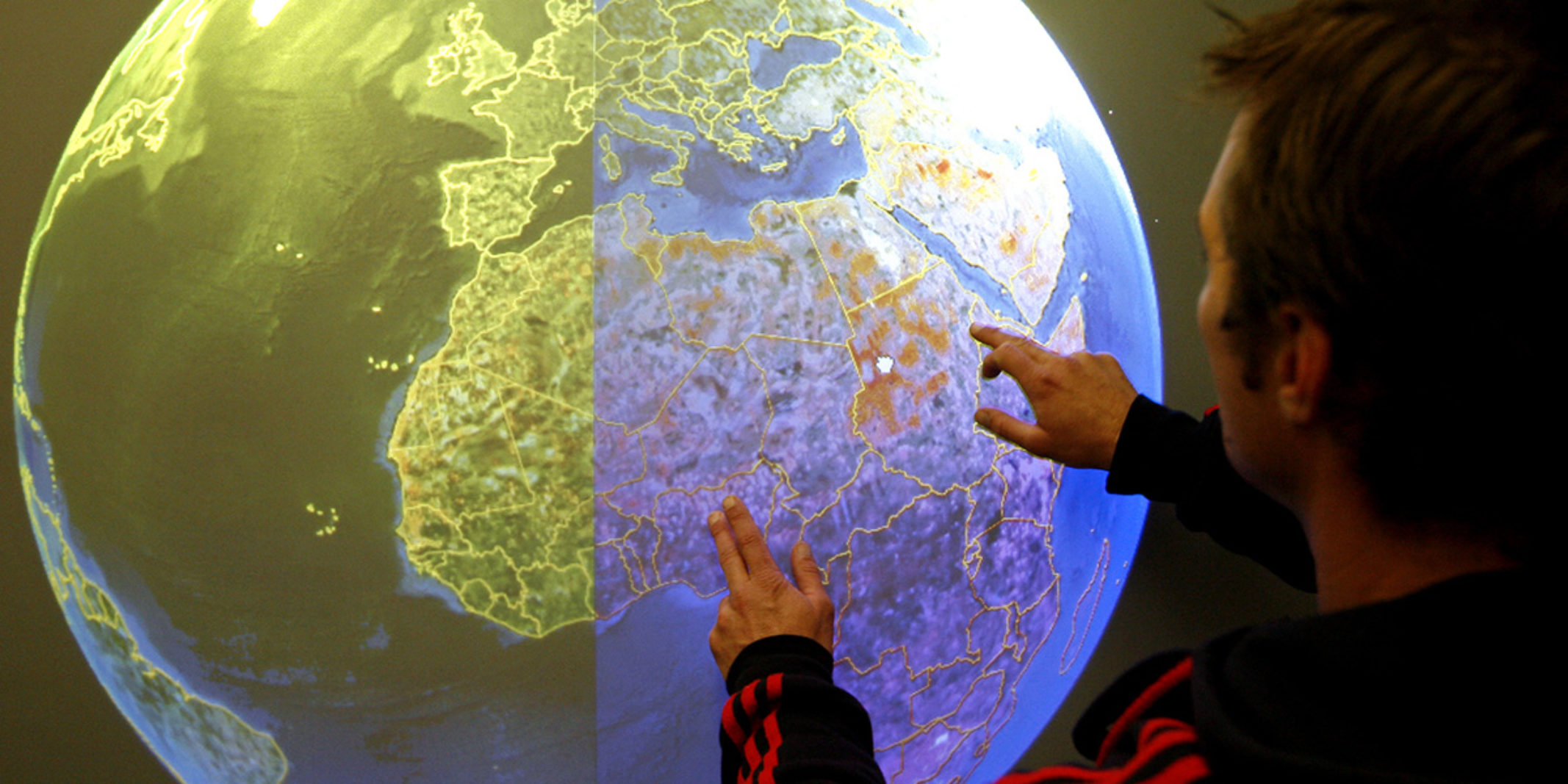

DC: Think about understanding climate change. The problem has many different parts; let’s just take on understanding dirt, understanding the nature of geology throughout the world. We had researchers who had done very deep studies of very small pieces, with no real global view of something even as basic as what the Earth is like. But we now have increasingly large meta-studies that can take thousands of detailed studies, read them (machine-read them), and begin to stitch them together to assemble a global map of the Earth’s form from all of these point studies, each of which was done for a different purpose.

I think about the ability to take a large number of microscopic studies, each done for a particular purpose, and produce a kind of macroscopic understanding for a broader purpose. That kind of concrete meta-study has been coming out of the geology community, and you see that kind of outlook beginning to play out in many other domains. So I think that’s a whole category of ability: ‘putting together’ fairly deep knowledge that is quite fragmented to create something new.

I hear about other efforts like that taking place. In combating malaria, you may ask, “What’s underneath that?” It’s not just about understanding mosquitoes. It’s also about hydrology, to understand where the puddles might be, and how that hydrology fits together with epidemiology, to understand how the disease might spread. We’re putting those very disparate disciplines together to understand the particular problem and to pinpoint the interventions that would be required to make a difference in malaria.

SH: So you’re highlighting the meta-framework that brought together related, but previously unconnected, sources of insight.

DC: Yes, in order to produce something that then gives you a very clear understanding that you could act on for a particular purpose. You have a finite amount of resources that you can deploy to make a difference in the spread of malaria next year. You really could get tremendous insight if you could put those vantage points together to see exactly what, where, and when.

SH: Any final thoughts? I really appreciate all of your thoughts so far, but you get the last word.

DC: I want to thank you for starting this conversation. I think these are the kinds of questions that need to be a much bigger part of the conversation. The focus should not just be the particular tools, or the trajectories of various transdisciplinary studies and people, but how they reflect changes both in the world we’re living in and in how we might live in that world.