Two Sigma’s mission is to find value in the world’s data on behalf of clients, and we rely on immense computing resources to do so. For example, we:

- Store 300+ petabytes of data (more than all the printed information in the world)

- Conduct more than 100,000 market data simulations daily

- Employ processing power measured in zettaFLOPS (think of billions of trillions of calculators, each doing one calculation per second, around the clock)

Clearly, all this storage and compute activity consumes a lot of energy, driving up both expenses and carbon emissions. That’s why, in 2022, Two Sigma’s Sustainability Science team began collaborating with various engineering teams within the company to study potential strategies for increasing the efficiency of our computing resources.

The first step was to investigate ways to measure power consumption at the server level accurately, a more complex task than one might assume. As we note in this article about that effort, “If we could make parts of our compute environment more efficient without sacrificing performance, we could both mitigate the company’s contribution to global carbon emissions while potentially reducing overhead costs.”

In 2024 the program matured to include technical case studies that help us understand which green software patterns lower carbon emissions across our compute environments.1 One case study, outlined below, shares how one of our engineering teams used a routine server refresh to reconfigure their resources in a way that meaningfully reduces power consumption while maintaining performance. Focused primarily on maximizing the use of each server and reducing the overall amount of hardware, this initiative highlights a promising approach to reducing the energy-related costs of Two Sigma’s compute resources while lowering our carbon footprint, an approach we hope other compute-intensive organizations will explore.

Case Study: Software Carbon Intensity of Live Trading Applications

Two Sigma maintains a sizable computing footprint in our own facilities. This grants us operational control over our CPU platforms, enabling us to make conscientious design choices aimed at improving energy efficiency and reducing overall energy consumption. Routine hardware refreshes, where teams transition from obsolete to new hardware, present a natural intervention point to shift towards configurations that are both cost-effective and less carbon-intensive. To validate this, the Sustainability Science team collaborated with a trading engineering team to conduct a retrospective analysis.

Why is a financial sciences company so focused on hardware and electricity?

For Two Sigma, being a responsible corporate sustainability citizen means organizing our deployments to consume fewer resources while achieving the same (or greater) levels of work.

We initially explored this concept here, but let’s take a moment to revisit why less energy-intensive hardware is also less carbon-intensive. Generally, the electricity grid in the U.S., with a few exceptions such as the Bay Area and Seattle, distributes power primarily generated from burning fossil fuels. “Grid carbon intensity” varies across different regions, with some areas having higher emissions than others. This concept is called “emissionality,” and it is becoming increasingly important when thinking about corporate renewable energy and siting renewable energy projects.2

Grid carbon intensity also varies by time of day. The availability of renewable and recyclable energy during trading hours – 9am to 5pm – is very much a work in progress for the grid networks that support the New York Stock Exchange and Chicago Mercantile Exchange. Below is a depiction of the average marginal emissions rate per megawatt-hour (MWh) of consumed electricity, broken down by hour, for the eastern New Jersey and Chicago areas. The grid carbon intensity during trading hours is similar across these two regions.
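The relationship between energy use and emissions described above can be sketched as a simple weighted sum: each hour's consumption multiplied by that hour's grid emissions rate. This is a minimal illustration, not Two Sigma's accounting method; the function name and every number in it are hypothetical placeholders.

```python
from typing import Sequence


def operational_emissions_kg(
    hourly_energy_mwh: Sequence[float],
    hourly_intensity_kg_per_mwh: Sequence[float],
) -> float:
    """Sum (energy consumed) x (grid emissions rate) over matching hours."""
    if len(hourly_energy_mwh) != len(hourly_intensity_kg_per_mwh):
        raise ValueError("need exactly one intensity value per energy reading")
    return sum(
        energy * intensity
        for energy, intensity in zip(hourly_energy_mwh, hourly_intensity_kg_per_mwh)
    )


# Hypothetical values for illustration only (not measured grid or company data).
energy = [0.5, 0.5, 0.5]            # MWh consumed in each of three hours
intensity = [400.0, 500.0, 600.0]   # kg CO2e per MWh in those same hours
total = operational_emissions_kg(energy, intensity)
```

Because the emissions rate is applied hour by hour, the same amount of electricity can carry a different footprint depending on when it is consumed, which is why the trading-hours window matters.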

Energy efficiency during the trading day: challenges and opportunities

Live trading programs require constant access to market data, necessitating that each trading application maintains a processed cache of up-to-date information. Data processing is energy-intensive because it reads from several real-time data sources to provide an accurate, usable set of data. These servers consume electricity for both computations and cooling systems, especially when handling massive amounts of data. And at the most basic level, when electronic circuits toggle, energy flows as the capacitors inside them charge and discharge. Popular efficiency options such as allowing servers to power down when they aren’t executing instructions or shifting workloads to ephemeral cloud solutions are not appropriate for this area of the business.

Software Sustainability Actions

When discussing sustainable software, it’s important to understand a few key terms and how they relate to each other.

First, we aim to reduce the carbon intensity of hardware. This means making the hardware itself more efficient so that it uses less energy and produces fewer carbon emissions. A less carbon-intensive hardware setup ultimately leads to a software system with a lower overall carbon footprint.

To achieve this, we focus on three key actions:

- Energy Efficiency: Actions taken to make software use less electricity to perform the same function.

- Hardware Efficiency: Actions taken to make software use fewer physical resources to perform the same function.

- Carbon Awareness: Actions taken to time- or region-shift software computation to take advantage of cleaner, more renewable, or lower-carbon sources of electricity.

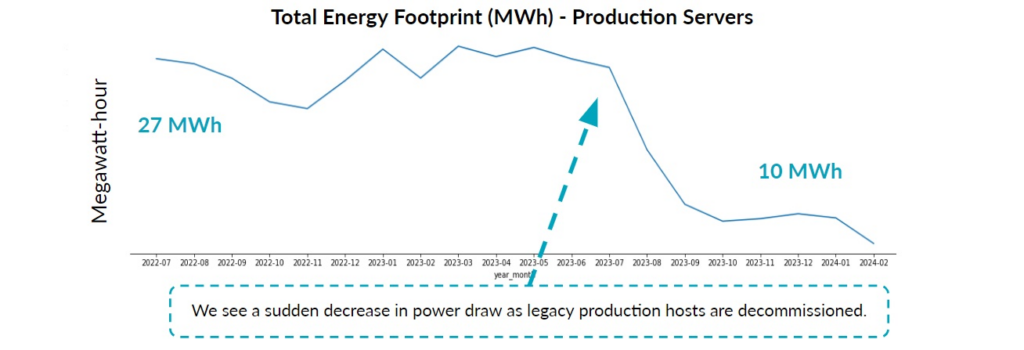

In January 2023, production hosts consumed 27 MWh of electricity. By January 2024, this figure had dropped to 10 MWh.

Success for the engineering team

Instead of opting for a one-to-one server replacement, the trading engineering team featured in this sustainability case study chose to implement a denser hardware configuration that significantly reduced both costs and energy consumption. A denser server has more cores and is an example of hardware efficiency. They gradually decommissioned approximately 60 legacy servers and reduced their absolute power consumption by 66%. The new configuration is not only more efficient but also more resilient and capable of handling a higher workload.


How the engineers consolidated the deployment

The transition required innovative thinking from platform reliability, virtualization, and networking teams to identify hardware that would meet the trading engineering team’s needs and improve overall system performance. Key assessment factors included cost efficiency, the number of cores per socket, performance, and cache size.

The platform engineering teams recommended 28-core processor hardware. During initial scoping, they considered the possibility of further consolidation by scaling memory and CPU within a single unit. While this approach could have further maximized per-server utilization, it would have significantly increased costs and potentially introduced support challenges. Ultimately, they decided to stay within the established capabilities of their preferred vendor to avoid novel issues during live trading and to avoid additional spending for negligible consolidation gains.

The team noted that, in the previous configuration, each server ran one application process and had a dedicated number of cores responsible for processing and storing market data for the trading application. These smaller servers often had unused cores, as the remaining cores were too few to support a second application.


In the new configuration, five times more trading programs run per server, sharing one cache of processed market data. Latency is also lower: trades are executed with minimal delay. This means a smaller fleet of higher-core-count servers can handle trading workloads just as effectively as, if not more effectively than, a larger fleet of lower-core-count servers. As a result, not only does the total number of cores needed to process market data decrease, because multiple applications share one cache, but the overall capacity to handle trading volume also increases due to optimized throughput.
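To see why sharing one cache reduces the cores devoted to market data, here is a rough sketch. It assumes, purely for illustration, that every server needs a fixed number of cores to build its cache regardless of how many programs read from it; the function name, the program count, and the per-cache core count are hypothetical and do not come from the deployment described here. Only the five-programs-per-server ratio is taken from the text.

```python
import math


def market_data_cores(programs: int, programs_per_server: int, cores_per_cache: int) -> int:
    """Total cores spent on market data when each server builds one cache."""
    if programs_per_server <= 0:
        raise ValueError("programs_per_server must be positive")
    servers = math.ceil(programs / programs_per_server)
    return servers * cores_per_cache


# Hypothetical workload: 60 trading programs, 4 cores to build each cache.
PROGRAMS = 60
CORES_PER_CACHE = 4

old_layout = market_data_cores(PROGRAMS, programs_per_server=1, cores_per_cache=CORES_PER_CACHE)
new_layout = market_data_cores(PROGRAMS, programs_per_server=5, cores_per_cache=CORES_PER_CACHE)
```

Under these assumptions the one-program-per-server layout spends 240 cores on market data and the shared-cache layout spends 48. A real shared cache may need somewhat more cores than a single-tenant one, so treat the ratio as an upper bound on the saving.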

Practitioner take-aways

Sustainable engineering can be business-aligned. By identifying and consolidating underutilized servers, Two Sigma has reduced its operational carbon footprint. The shift to newer, denser production servers in one area of live trading has proven particularly effective. The new deployment strategy means fewer cores are required to process market data, thanks to a denser setup where multiple programs can share cached processed data.

Consolidation is a valid sustainable software strategy because hardware consumes electricity even when idle (its baseline power consumption), so unused cores have an energy footprint even when they aren’t executing a workload. The total static hardware power overhead (i.e., baseline power and cooling) is significantly reduced because it scales linearly with the number of machines. Also, limiting the number of times a task, such as processing market data, must be executed proportionally lowers the energy footprint of an application. These changes align with at least three green software design patterns: energy efficient framework, optimize average CPU utilization, and remove unused assets.

Sustainability Deep Dive

Our partner engineering team made several sweeping engineering changes that resulted in a live trading application with a lower carbon intensity. It is the responsibility of the Sustainability Science team to prove which elemental parts of that change were specifically impactful and understand why, so that these might be replicated in future routine hardware refreshes.

Gathering evidence

One of the primary questions the sustainability team addressed was why the removal of unused or underutilized assets had such a significant impact on the overall energy footprint. The Green Software Foundation and new developer-centric publications, such as Building Green Software, highlight the benefits of doing so. But how can we quantitatively measure and express the impact of these activities? As you can see in the figure below, for this case study we observe a decrease in power draw as legacy production hosts are decommissioned. The team confirmed that no other major confounding changes occurred at this time.

Data analysis: active vs baseline power consumption

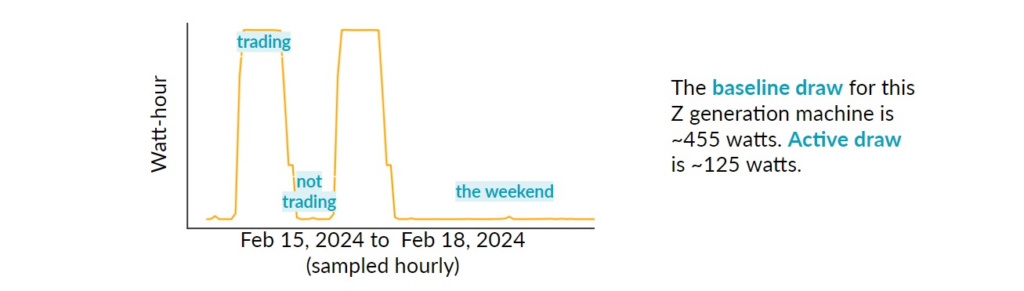

Not all power use supports active workloads. Our server-level telemetry data makes clear that servers draw a significant amount of baseline power while just waiting to be handed instructions. This explains the sharp decline in power totals when a group of servers is unplugged. It also explains why consolidation (where you minimize hardware while maintaining core count), as well as resource-sharing activities like containerization and virtualization, is a highly effective strategy for reducing Two Sigma’s energy footprint.

For other organizations looking to apply this analysis to their on-premises technical estate, a simple yet insightful way to see how much power goes to supporting active workloads is to subtract each server’s lowest observed power draw from its hourly power readings. An underutilized server will draw nearly 100% of its power as baseline, regardless of age or size.
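The subtraction described above can be sketched in a few lines. This is a minimal illustration that treats a server's lowest observed reading as its baseline; the function name and the sample readings are hypothetical, and real telemetry would likely need outlier handling (for example, ignoring readings taken while a host was powered off).

```python
from typing import List, Sequence, Tuple


def split_baseline_active(hourly_readings: Sequence[float]) -> Tuple[float, List[float], float]:
    """Return (baseline, active readings, baseline share of total draw).

    The baseline is approximated by the lowest observed hourly reading.
    """
    if not hourly_readings:
        raise ValueError("need at least one reading")
    baseline = min(hourly_readings)
    active = [reading - baseline for reading in hourly_readings]
    total = sum(hourly_readings)
    baseline_share = (baseline * len(hourly_readings)) / total if total else 0.0
    return baseline, active, baseline_share


# Hypothetical hourly readings for one lightly used server.
readings = [220.0, 225.0, 230.0, 225.0]
baseline, active, share = split_baseline_active(readings)
```

For these sample readings the baseline is 220, the active component never exceeds 10, and roughly 98% of the total draw is baseline, which is the signature of an underutilized server.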

Data analysis: server size and age

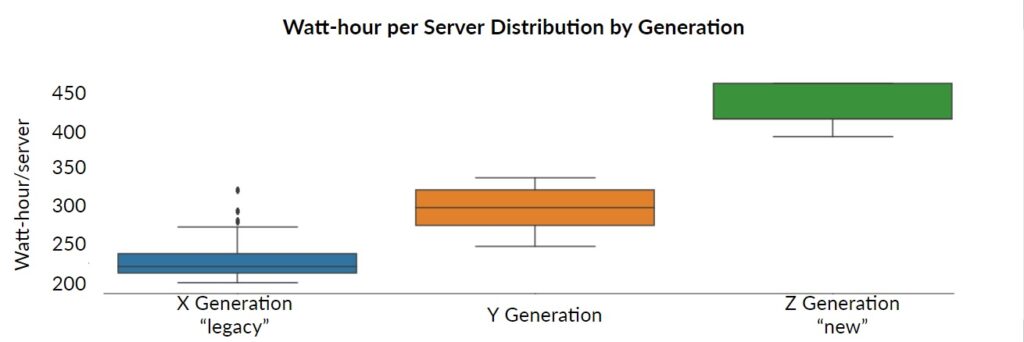

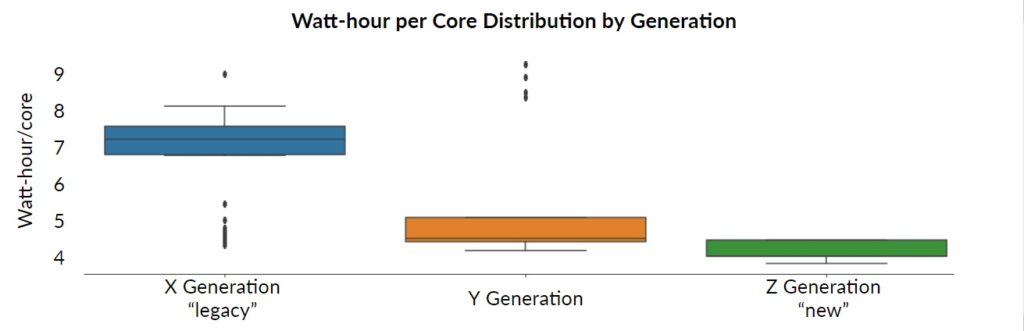

The second question the Sustainability Science team answered was: How can larger servers be more efficient than smaller servers? At first glance, newer Z generation servers consume more baseline power on average: ~450 watt-hours compared to ~225 watt-hours for legacy X generation servers.

However, the Z generation servers have more cores. When you distribute baseline power across a greater number of cores, the per-core power intensity of each machine is actually lower: ~4 watt-hours per core compared to ~7 watt-hours per core. Per-server baseline power is like a sunk cost: you pay it no matter what, and it scales linearly with each additional server.
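The per-core normalization can be sketched as a simple division. The baseline figures below are the approximate values quoted above, but the core counts are hypothetical: the article does not state them, and they were picked here only so the results land near the quoted ~7 and ~4 per-core figures. The function names are illustrative as well.

```python
def baseline_per_core(baseline_wh: float, cores: int) -> float:
    """Spread a server's baseline draw evenly across its cores."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return baseline_wh / cores


def fleet_baseline(baseline_wh: float, servers: int) -> float:
    """Baseline draw scales linearly with the number of servers."""
    return baseline_wh * servers


LEGACY_BASELINE_WH = 225.0  # approximate figure quoted for X generation
NEW_BASELINE_WH = 450.0     # approximate figure quoted for Z generation
LEGACY_CORES = 32           # hypothetical core count, not from the article
NEW_CORES = 112             # hypothetical core count, not from the article

legacy_per_core = baseline_per_core(LEGACY_BASELINE_WH, LEGACY_CORES)
new_per_core = baseline_per_core(NEW_BASELINE_WH, NEW_CORES)
```

With these assumed core counts the legacy server works out to about 7.0 per core and the newer one to about 4.0, so the larger machine carries less baseline overhead for each core it provides even though its total baseline is twice as high.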

Data analysis: normalizing per core

Engineering teams often consider application platform design and workload resourcing on a per-core basis, and we now have evidence to suggest that applying this approach to carbon intensity and sustainability performance is equally reasonable. It is particularly useful when comparing and contrasting CPU platforms.

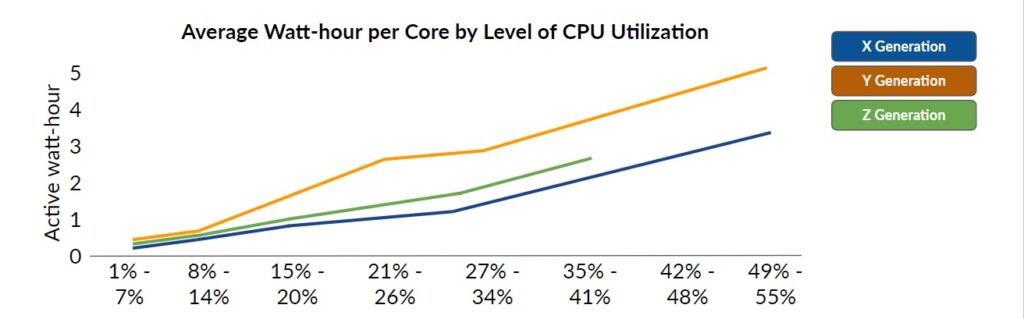

Since Z generation servers are not just larger but newer, part of the improvement in energy efficiency can likely be attributed to advancements made by the manufacturer rather than improvements to the application design.

However, it is also probable that the new configuration is a more efficient framework for the given workload. By comparing active power draw per core relative to CPU utilization rates, we observe that smaller, older-generation machines (Y Generation) exhibit a faster increase in per-core power consumption compared to newer-generation machines (Z Generation) supporting the same workloads. This suggests that newer-generation machines handle workloads more efficiently overall.
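One way to express "a faster increase in per-core power consumption" is to fit a straight line to active power per core against CPU utilization and compare the slopes. The sketch below uses an ordinary least-squares slope; it is an assumed approach for illustration, not necessarily the analysis the team ran, and the data points are invented.

```python
from typing import Sequence


def least_squares_slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Slope of the ordinary least-squares line through (x, y) pairs."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("utilization values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


# Hypothetical observations: CPU utilization (%) and active power per core.
utilization = [10.0, 20.0, 30.0, 40.0]
older_gen_active_per_core = [1.0, 2.0, 3.0, 4.0]
newer_gen_active_per_core = [0.5, 1.0, 1.5, 2.0]

older_slope = least_squares_slope(utilization, older_gen_active_per_core)
newer_slope = least_squares_slope(utilization, newer_gen_active_per_core)
```

A smaller slope means each additional point of utilization costs less active power per core. In this made-up data the newer generation's slope is half that of the older one.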

Evidence-based insights

Observing the evidence in its totality, the Sustainability Science team derived several key insights:

- The majority of a server’s power consumption can be attributed to baseline power. When this is the case, removing underutilized assets can have a particularly significant impact.

- A combination of performance metrics has enabled Two Sigma to better understand the effects of software and hardware design choices on energy consumption. Your calculation for baseline power consumption needn’t be perfect to drive actionable insights.

- Pairing CPU utilization metrics with energy metrics can facilitate cross-model or cross-generation analyses.

- Retrospective studies can provide valuable evidence and motivation to advance your software sustainability programs.

Conclusion

Sustainably engineered software is designed, developed and implemented to limit energy consumption and minimize environmental impact. This is just one of the case studies and product integrations Two Sigma’s Sustainability Science and engineering teams have completed this year (stay tuned for our next piece, which will focus on sustainable cloud applications)!

It’s through case studies that sustainable engineering initiatives can isolate the green software patterns that support emissions reduction, and quantify the total and relative changes in emissions. In the long term, Two Sigma’s Sustainability Science team aims to incorporate sustainable compute practices across the organization and to continue to support the broader community of practitioners by making insights and solutions open source.

This particular case study led to a larger policy change where engineering teams are invited to undergo a sustainability rationalization exercise as part of the annual hardware budgeting process. They are given information on their current utilization levels and carbon emissions footprint, and offered support from the sustainability and infrastructure teams to identify and make changes to their applications and configurations.